Calibrating COVID-19 susceptible-exposed-infected-removed models with time-varying effective contact rates

We describe the population-based SEIR (susceptible, exposed, infected, removed) model developed by the Irish Epidemiological Modelling Advisory Group (IEMAG), which advises the Irish government on COVID-19 responses. The model assumes a time-varying effective contact rate (equivalently, a time-varying reproduction number) to model the effect of non-pharmaceutical interventions. A crucial technical challenge in applying such models is their accurate calibration to observed data, e.g., to the daily number of confirmed new cases, as the past history of the disease strongly affects predictions of future scenarios. We demonstrate an approach based on inversion of the SEIR equations in conjunction with statistical modelling and spline-fitting of the data, to produce a robust methodology for calibration of a wide class of models of this type.
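To illustrate how a time-varying effective contact rate enters an SEIR model, here is a minimal sketch. It is not the IEMAG model itself: the logistic form of `beta`, all parameter values, and the function names are assumptions chosen only for illustration, and the equations are integrated with a simple forward-Euler step.

```python
import math

def beta(t, beta0=0.6, beta1=0.15, t_switch=30.0, width=5.0):
    """Hypothetical contact rate: smooth drop from beta0 to beta1 around t_switch."""
    return beta1 + (beta0 - beta1) / (1.0 + math.exp((t - t_switch) / width))

def simulate_seir(days=120, dt=0.1, n=1_000_000, e0=10.0,
                  sigma=1 / 5, gamma=1 / 7):
    """Forward-Euler integration of a basic SEIR model.

    sigma: rate of leaving the exposed class (assumed 5-day latent period).
    gamma: removal rate (assumed 7-day infectious period).
    Returns the daily number of new infectious cases and the final state.
    """
    s, e, i, r = n - e0, e0, 0.0, 0.0
    steps_per_day = int(round(1 / dt))
    daily_new_cases = []
    for day in range(days):
        new_cases = 0.0
        for k in range(steps_per_day):
            t = day + k * dt
            infections = beta(t) * s * i / n * dt  # S -> E
            onsets = sigma * e * dt                # E -> I
            removals = gamma * i * dt              # I -> R
            s -= infections
            e += infections - onsets
            i += onsets - removals
            r += removals
            new_cases += onsets
        daily_new_cases.append(new_cases)
    return daily_new_cases, (s, e, i, r)

cases, final_state = simulate_seir()
print("peak day:", max(range(len(cases)), key=cases.__getitem__))
```

Calibration in the sense of the abstract runs in the opposite direction: given observed daily cases, one seeks the `beta(t)` that reproduces them. The sketch shows only the forward model.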

Memory-cognizant generalization to Simon's random-copying neutral model

Simon’s classical random-copying model, introduced in 1955, has garnered much attention for its ability, in spite of an apparent simplicity, to produce characteristics similar to those observed across the spectrum of complex systems. Through a discrete-time mechanism in which items are added to a sequence based upon rich-gets-richer dynamics, Simon demonstrated that the resulting size distributions of such sequences exhibit power-law tails. The simplicity of this model arises from the approach by which copying occurs uniformly over all previous elements in the sequence. Here we propose a generalization of this model which moves away from this uniform assumption, instead incorporating memory effects that allow the copying event to occur via an arbitrary kernel. Through this approach we first demonstrate the potential to determine further information regarding the structure of sequences from the classical model before illustrating, via analytical study and numeric simulation, the flexibility offered by the arbitrary choice of memory. Furthermore we demonstrate how previously proposed memory-dependent models can be further studied as specific cases of the proposed framework.
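The copying mechanism described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: `alpha` denotes the probability of adding a new item type, `kernel` is a hypothetical function assigning a positive weight to each earlier element according to its age, and `kernel=None` recovers uniform copying as in the classical model.

```python
import random
from collections import Counter

def simon_sequence(n_steps, alpha, kernel=None, seed=0):
    """Generate a sequence of item labels by innovation and copying.

    alpha:  probability that a step introduces a brand-new label.
    kernel: optional function age -> positive weight (age >= 1);
            None means copy uniformly over all previous elements.
    """
    rng = random.Random(seed)
    seq = [0]
    next_label = 1
    for t in range(1, n_steps):
        if rng.random() < alpha:
            seq.append(next_label)          # innovation
            next_label += 1
        elif kernel is None:
            seq.append(seq[rng.randrange(t)])  # uniform copying
        else:
            # Element j was added t - j steps ago; weight it by the kernel.
            weights = [kernel(t - j) for j in range(t)]
            source = rng.choices(range(t), weights=weights)[0]
            seq.append(seq[source])
    return seq

def size_distribution(seq):
    """Map each occurrence count to the number of labels with that count."""
    return Counter(Counter(seq).values())

classical = simon_sequence(2000, alpha=0.1)
recent_biased = simon_sequence(2000, alpha=0.1, kernel=lambda age: age ** -1.0)
print(max(Counter(classical).values()), max(Counter(recent_biased).values()))
```

The kernel branch costs O(t) per step, which is adequate for a small demonstration but would need a more efficient sampler for long sequences.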

Hierarchical route to the emergence of leader nodes in real-world networks

A large number of complex systems, naturally emerging in various domains, are well described by directed networks, resulting in numerous interesting features that are absent from their undirected counterparts. Among these properties is a strong non-normality, inherited from a strong asymmetry that characterizes such systems and guides their underlying hierarchy. In this work, we consider an extensive collection of empirical networks and analyze their structural properties using information theoretic tools. A ubiquitous feature is observed amongst such systems as the level of non-normality increases. When the non-normality reaches a given threshold, highly directed substructures aiming towards terminal (sink or source) nodes, denoted here as leaders, spontaneously emerge. Furthermore, the relative number of leader nodes describes the level of anarchy that characterizes the networked systems. Based on the structural analysis, we develop a null model to capture features such as the aforementioned transition in the networks’ ensemble. We also demonstrate that the role of leader nodes at the pinnacle of the hierarchy is crucial in driving dynamical processes in these systems. This work paves the way for a deeper understanding of the architecture of empirical complex systems and the processes taking place on them.

A complex networks approach to ranking professional Snooker players

A detailed analysis of matches played in the sport of Snooker during the period 1968-2020 is used to calculate a directed and weighted dominance network based upon the corresponding results. We consider a ranking procedure based upon the well-studied PageRank algorithm that incorporates details of not only the number of wins a player has had over their career but also the quality of opponent faced in these wins. Through this study we find that John Higgins is the highest performing Snooker player of all time with Ronnie O’Sullivan appearing in second place. We demonstrate how this approach can be applied across a variety of temporal periods in each of which we may identify the strongest player in the corresponding era. This procedure is then compared with more classical ranking schemes. Furthermore, a visualization tool known as the rank-clock is introduced to the sport which allows for immediate analysis of the career trajectory of individual competitors. These results further demonstrate the use of network science in the quantification of success within the field of sport.
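A PageRank-style ranking on a dominance network can be sketched as below. The player names and win counts are invented placeholders, not data from the study, and the damping factor of 0.85 is the conventional default rather than a value taken from the paper. Each loser passes its score to the players who beat it, in proportion to the number of defeats.

```python
def pagerank(wins, damping=0.85, tol=1e-12, max_iter=1000):
    """Power-iteration PageRank on a weighted dominance network.

    wins[(winner, loser)] = number of matches in which winner beat loser.
    An edge points from loser to winner, so score flows towards winners.
    """
    players = sorted({p for pair in wins for p in pair})
    n = len(players)
    losses = {p: 0.0 for p in players}  # out-strength of each player
    for (winner, loser), w in wins.items():
        losses[loser] += w
    rank = {p: 1.0 / n for p in players}
    for _ in range(max_iter):
        # Undefeated players have no out-edges; spread their score evenly.
        dangling = sum(rank[p] for p in players if losses[p] == 0)
        new = {p: (1 - damping) / n + damping * dangling / n for p in players}
        for (winner, loser), w in wins.items():
            new[winner] += damping * rank[loser] * w / losses[loser]
        change = sum(abs(new[p] - rank[p]) for p in players)
        rank = new
        if change < tol:
            break
    return rank

# Hypothetical results: player_a is unbeaten, player_c never wins.
toy_wins = {
    ("player_a", "player_b"): 2,
    ("player_a", "player_c"): 2,
    ("player_b", "player_c"): 1,
}
scores = pagerank(toy_wins)
for name in sorted(scores, key=scores.get, reverse=True):
    print(name, round(scores[name], 3))
```

Because a win over a highly ranked opponent transfers more score than a win over a weak one, the ordering can differ from a simple count of wins.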

Identification of skill in an online game: The case of Fantasy Premier League

In all competitions where results are based upon an individual’s performance the question of whether the outcome is a consequence of skill or luck arises. We explore this question through an analysis of a large dataset of approximately one million contestants playing Fantasy Premier League, an online fantasy sport where managers choose players from the English football (soccer) league. We show that managers’ ranks over multiple seasons are correlated and we analyse the actions taken by managers to increase their likelihood of success. The prime factors in determining a manager’s success are found to be long-term planning and consistently good decision-making in the face of the noisy contests upon which this game is based. Similarities between managers’ decisions over time that result in the emergence of ‘template’ teams, suggesting a form of herding dynamics taking place within the game, are also observed. Taken together, these findings indicate common strategic considerations and consensus among successful managers on crucial decision points over an extended temporal period.

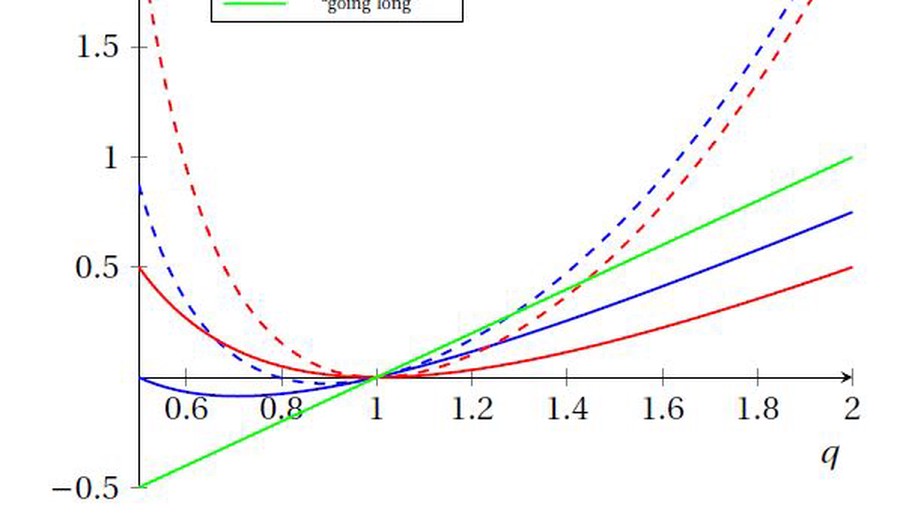

A Generalized Framework for Simultaneous Long-Short Feedback Trading

We present a generalization of the Simultaneous Long-Short (SLS) trading strategy described in recent control literature wherein we allow for different parameters across the short and long sides of the controller; we refer to this new strategy as Generalized SLS (GSLS). Furthermore, we investigate the conditions under which positive gain can be assured within the GSLS setup for both deterministic stock price evolution and geometric Brownian motion. In contrast to existing literature in this area (which places little emphasis on the practical application of SLS strategies), we suggest optimization procedures for selecting the control parameters based on historical data, and we extensively test these procedures across a large number of real stock price trajectories (495 in total). We find that the implementation of such optimization procedures greatly improves the performance compared with fixing control parameters, and, indeed, the GSLS strategy outperforms the simpler SLS strategy in general.
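The sketch below shows a linear-feedback long-short controller in discrete time. It assumes the form commonly used in the SLS literature, where each side invests an initial amount plus a feedback gain times that side's cumulative gain. The parameter names (`i0_long`, `k_long`, `i0_short`, `k_short`) and the way the two sides are allowed to differ are assumptions for illustration and may not match the paper's exact GSLS parameterization.

```python
def gsls_gain(prices, i0_long=1.0, k_long=2.0, i0_short=1.0, k_short=2.0):
    """Cumulative trading gain of a long-short feedback controller.

    Long side:  investment =  i0_long  + k_long  * gain_long
    Short side: investment = -i0_short - k_short * gain_short
    Setting the long and short parameters equal gives the symmetric case.
    """
    gain_long = 0.0
    gain_short = 0.0
    for prev, curr in zip(prices, prices[1:]):
        ret = curr / prev - 1.0                      # per-period return
        invest_long = i0_long + k_long * gain_long
        invest_short = -i0_short - k_short * gain_short
        gain_long += invest_long * ret
        gain_short += invest_short * ret
    return gain_long + gain_short

# Symmetric parameters on a rising and a falling toy price path.
print(gsls_gain([100.0, 110.0, 121.0]))
print(gsls_gain([100.0, 90.0, 81.0]))
```

In this toy example the symmetric controller gains on both the rising and the falling path, which is the behavior that motivates the positive-gain analysis. Choosing the four parameters from historical data, as the abstract describes, would wrap this function in an optimization loop.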

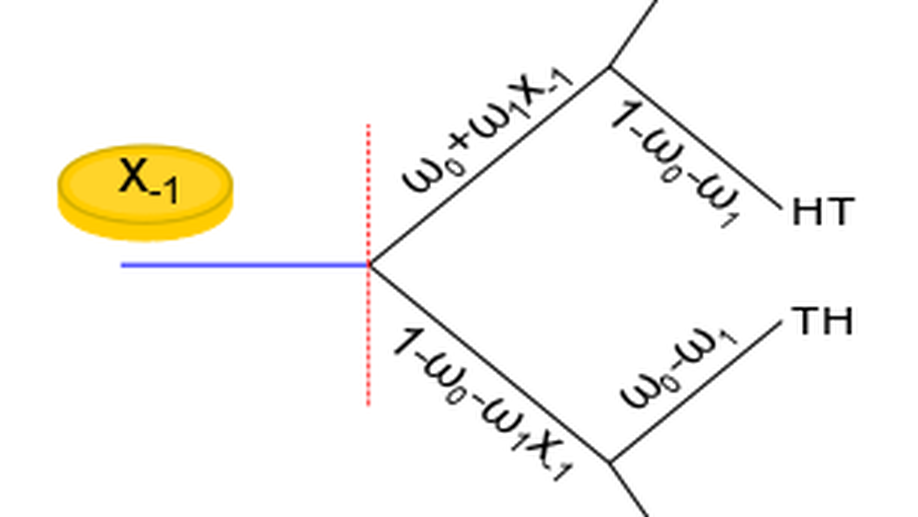

A generalization of the classical Kelly betting formula to the case of temporal correlation

For sequential betting games, Kelly’s theory, aimed at maximization of the logarithmic growth of one’s account value, involves optimization of the so-called betting fraction $K$. In this paper, we extend the classical formulation to allow for temporal correlation among bets. For the example of successive coin flips with even-money payoff, used throughout the paper to demonstrate the potential of this new research direction, we formulate and solve a problem with memory depth $m$. By this, we mean that the outcomes of coin flips are no longer assumed to be i.i.d. random variables. Instead, the probability of heads on flip $k$ depends on previous flips $k-1, k-2, \ldots, k-m$. For the simplest case of $n$ flips, even-money payoffs and $m = 1$, we obtain a closed-form solution for the optimal betting fraction, denoted $K_n$, which generalizes the classical result for the memoryless case. That is, instead of fraction $K = 2p-1$ which pervades the literature for a coin with probability of heads $p \ge 1/2$, our new fraction $K_n$ depends on both $n$ and the parameters associated with the temporal correlation model. Subsequently, we obtain a generalization of these results for cases when $m > 1$ and illustrate the use of the theory in the context of numerical simulations.
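As a check on the classical memoryless result quoted above, the following sketch maximizes the expected logarithmic growth $p \log(1+K) + (1-p)\log(1-K)$ over a grid of betting fractions and compares the maximizer with $2p-1$. It covers only the i.i.d. case and does not reproduce the correlated-flip fraction $K_n$; the function names and grid resolution are assumptions for illustration.

```python
import math

def expected_log_growth(k, p):
    """Expected log growth per even-money bet with betting fraction k."""
    return p * math.log(1 + k) + (1 - p) * math.log(1 - k)

def numerical_kelly(p, grid=10_000):
    """Grid search for the growth-maximizing fraction on [0, 1)."""
    candidates = [j / grid for j in range(grid)]
    return max(candidates, key=lambda k: expected_log_growth(k, p))

for p in (0.5, 0.55, 0.6, 0.75):
    print(p, numerical_kelly(p), 2 * p - 1)
```

Setting the derivative $p/(1+K) - (1-p)/(1-K)$ to zero gives $K = 2p - 1$ directly, which is what the grid search approximates.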

Quantifying uncertainty in a predictive model for popularity dynamics

The Hawkes process has garnered attention in recent years for its suitability to describe the behavior of online information cascades. Here, we present a fully tractable approach to analytically describe the distribution of the number of events in a Hawkes process, which, in contrast to purely empirical studies or simulation-based models, enables the effect of process parameters on cascade dynamics to be analyzed. We show that the presented theory also allows predictions to be made regarding the future distribution of events after a given number of events have been observed during a time window. Our results are derived through a novel differential-equation approach to obtain the governing equations of a general branching process. We confirm our theoretical findings through extensive simulations of such processes and apply them to empirical data obtained from threads of an online opinion board. This work lays the groundwork for more complete analyses of the self-exciting processes that govern the spreading of information through many communication platforms, including the potential to predict cascade dynamics within confidence limits.
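The branching-process view of a Hawkes process can be illustrated with a simplified simulation. The sketch below ignores event times entirely and assumes each event triggers a Poisson-distributed number of direct offspring with mean `branching_ratio` (a hypothetical parameter name). It therefore samples only the total cascade size started by one event, not the time-windowed distributions analyzed in the paper.

```python
import math
import random

def poisson(rng, lam):
    """Sample a Poisson variate with mean lam (Knuth's multiplication method)."""
    threshold = math.exp(-lam)
    k, prod = 0, rng.random()
    while prod > threshold:
        k += 1
        prod *= rng.random()
    return k

def cascade_size(rng, branching_ratio, max_events=10**6):
    """Total number of events in a cascade started by a single event."""
    size, active = 1, 1
    while active > 0 and size < max_events:
        # Each event in the current generation spawns its own offspring.
        children = sum(poisson(rng, branching_ratio) for _ in range(active))
        size += children
        active = children
    return size

def simulate_cascade_sizes(n_cascades, branching_ratio, seed=0):
    rng = random.Random(seed)
    return [cascade_size(rng, branching_ratio) for _ in range(n_cascades)]

sizes = simulate_cascade_sizes(20000, branching_ratio=0.5)
print("mean size:", sum(sizes) / len(sizes))
```

For a subcritical branching ratio $n^* < 1$ the mean total size is $1/(1-n^*)$, so the empirical mean for a ratio of 0.5 should be close to 2, which gives a quick sanity check on the simulation.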

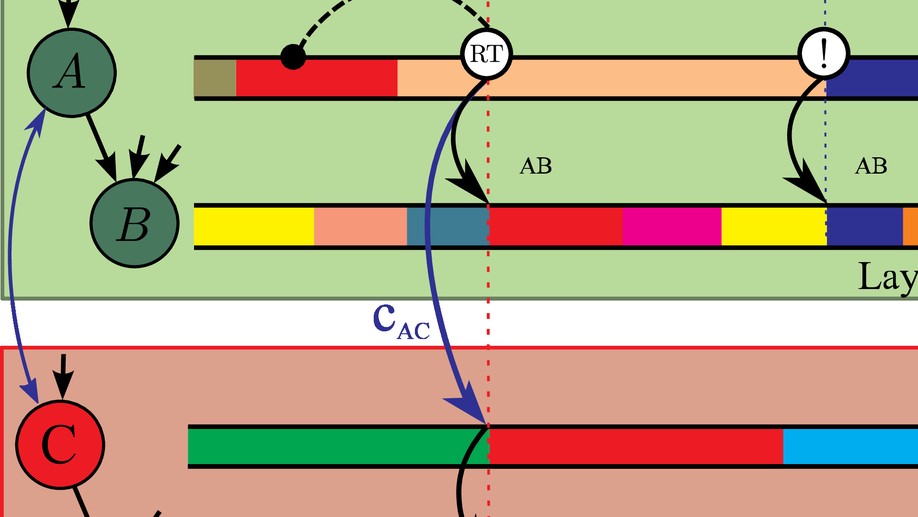

Spreading of Memes on Multiplex Networks

A model for the spreading of online information or ‘memes’ on multiplex networks is introduced and analyzed using branching-process methods. The model generalizes that of (Gleeson et al 2016 Phys. Rev. X) in two ways. First, even for a monoplex (single-layer) network, the model is formulated for any specific network given by its adjacency matrix, instead of being restricted to an ensemble of random networks. Second, a multiplex version of the model is introduced to capture the behavior of users who post information from one social media platform to another. In both cases the branching-process analysis demonstrates that the dynamical system is, in the limit of low innovation, poised near a critical point, which is known to lead to heavy-tailed distributions of meme popularity similar to those observed in empirical data.